Preparing for take-off: How AI will turbocharge Translation Engines

For some time now, we’ve been using Translation Engines to provide the best possible translation for a given piece of text. With the advent of Artificial Intelligence, we can now take that service to the next level. Although generative AI is not ideally suited to machine translation, by sprinkling some magic linguistic asset dust on it, we can use it to generate translations that respect the required style, tone, terminology, and just about anything else required for a particular project. Let’s explore this more below with a history lesson, an animated movie, and a promise of better translations to come.

A brief history of Translation Engines

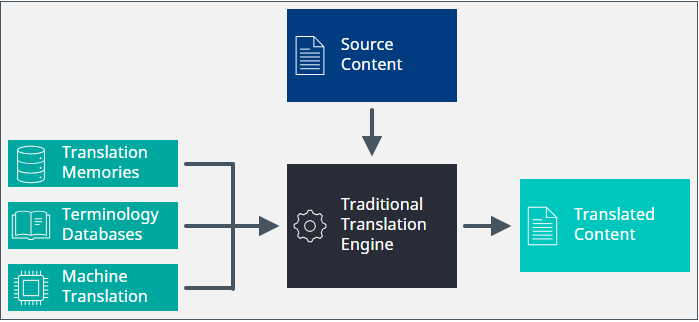

Back when our new cloud platform was being created, we decided to take a new approach to the way that linguistic assets were organized and used. Traditionally, each linguistic asset was treated in isolation and there was a clear separation of duties for Translation Memory, Terminology, and Machine Translation. Our goal back then was to bring these assets together to create a synergistic service that would provide the best possible match based on all the information to hand. Gone were the days of applying a Translation Memory, mopping up any untranslated text with Machine Translation, and then providing translators with terminology when they were editing the translated text. Instead, our vision was to maximize the effectiveness of the assets to create the “best possible match” that we could. This was the birth of the Translation Engine.

Some of you may have seen the Aardman Animations film Chicken Run. It featured a rather gruesome machine that would turn chickens into pies. Quite how the chickens became pies was never shown or discussed; it was a mysterious and ominous machine but undoubtedly a triumph of engineering. Mrs. Tweedy coined the phrase “chickens go in, pies come out.” What’s this got to do with translation, you ask? Well, I always thought of a Translation Engine as a similarly mysterious machine (though hopefully not quite as gruesome or ominous). Instead of chickens, we fed it text; instead of pies, we got translations. “Text goes in, translations come out.” You wouldn’t need to know how it worked, but you could rest assured that the linguistic assets were being squeezed for every bit of information we could use and that the output was the best possible translation we could make. The accompanying diagram shows this “mysterious box” approach.

Some of the “tricks” we use are exactly what you would expect from such an approach. They include, but are not limited to, the following, some of which are traditional AI technologies:

- Fragment matching

- Fuzzy match repair

- Prompting machine translation with applicable terminology
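To make the second trick a little less mysterious, here is a minimal Python sketch of fuzzy match repair. It is an illustration only, not the Translation Engine's actual implementation: the toy Translation Memory, the termbase, and the function names `best_fuzzy_match` and `repair` are all hypothetical, and similarity is scored with the standard library's `difflib` rather than a production matcher.

```python
from difflib import SequenceMatcher

# Hypothetical toy assets; real Translation Memories and termbases are far larger.
TRANSLATION_MEMORY = {
    "Press the red button to start.": "Appuyez sur le bouton rouge pour démarrer.",
    "Close the cover before use.": "Fermez le couvercle avant utilisation.",
}
TERMBASE = {"red": "rouge", "green": "vert"}


def best_fuzzy_match(source, memory):
    """Return (score, tm_source, tm_target) for the most similar TM entry."""
    scored = [
        (SequenceMatcher(None, source.split(), tm_source.split()).ratio(),
         tm_source, tm_target)
        for tm_source, tm_target in memory.items()
    ]
    return max(scored)


def repair(source, tm_source, tm_target, termbase):
    """Patch a fuzzy match when every difference is a one-word swap of known terms.

    Returns the repaired translation, or None if the match cannot be repaired
    with this simple strategy.
    """
    old_words, new_words = tm_source.split(), source.split()
    repaired = tm_target
    for tag, i1, i2, j1, j2 in SequenceMatcher(None, old_words, new_words).get_opcodes():
        if tag == "equal":
            continue
        # Anything other than a single-word replacement is out of scope here.
        if tag != "replace" or i2 - i1 != 1 or j2 - j1 != 1:
            return None
        old_term, new_term = old_words[i1], new_words[j1]
        if old_term not in termbase or new_term not in termbase:
            return None
        if termbase[old_term] not in repaired:
            return None
        # Swap the old term's translation for the new term's translation.
        repaired = repaired.replace(termbase[old_term], termbase[new_term], 1)
    return repaired


segment = "Press the green button to start."
score, tm_source, tm_target = best_fuzzy_match(segment, TRANSLATION_MEMORY)
print(repair(segment, tm_source, tm_target, TERMBASE))
```

A real repair step also has to cope with word order, agreement, and multi-word terms, which is exactly where the linguistic assets earn their keep.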

All well and good, you might think. Nice idea. Well executed. Handshakes and back slaps all round. Then 2023 rocks up and says “Hey, guess what, guys? AI is here!” The spokesbots for the AI revolution have been those lovely generative chat models that we can ask to write poetry, create a press release, or pen a catchy new LinkedIn headline. OpenAI seemed to steal a march on everyone but then others came along. Google, AWS, Meta, and more have already thrown their hats into the ring. You can also be sure there’s a bunch more coming over the hill; the horses’ hooves can be heard already. AI is here and here to stay, so what are we going to do?

Time for an upgrade

The rise of AI has given us an opportunity to tune up and turbocharge our Translation Engines. Where before we were almost at the limit of what we could squeeze out of those linguistic assets, now we can do so much more. These chatbots are all, loosely speaking, using the same technology. Huge amounts of linguistic data are analyzed and processed to create something known as an LLM. [Side note for linguistic geeks. LLM is not an acronym but an initialism. Acronyms are pronounced as a single word; think NATO, SCUBA, TASER, and YOLO. Initialisms are spoken as individual letters; think DNA, OMG, TBD, and FAQ. MPEG and JPEG are just outliers!] Back to the LLM. Some of you might say “what’s that?” Well, it stands for Large Language Model and, in our industry, that word right there in the middle is very, and I mean VERY, interesting. These LLMs will allow our Translation Engines to throw off the shackles and unleash powerful new functionality that we can embed right in the middle of our translation technology offerings. Things that an LLM can help with include:

- Translating text from one language to another having been given contextual information and guidance.

- Post-editing translations that have been created by Neural Machine Translation.

- Evaluating the quality of translated text and suggesting improvements.

- Re-writing source text to follow company style and terminology.

Fixing up machine or human translations will come soon but, right now, we’re focusing our efforts on the first bullet point in this list. We’ve already released an OpenAI Translator app that you can use in Trados Studio. This uses an LLM to transform, optimize, analyze, and suggest alternative translations for text in documents and it has support for prompts. I won’t go into too much detail here but it’s worth taking a look at the Wiki page on the RWS Community to get an understanding of how you can manipulate the prompt used to get different styles, tones, and lengths. What it doesn’t have, however, is access to all those lovely linguistic assets that we hold in a Translation Engine. That’s the secret sauce.

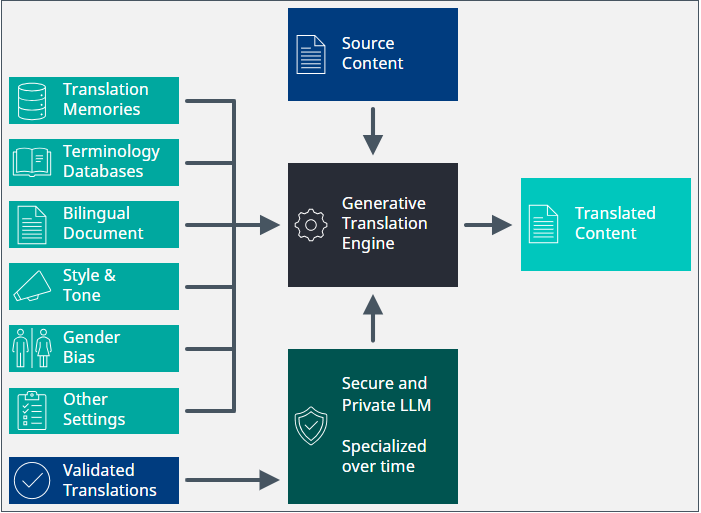

Using AI, our Translation Engines can now produce better translations than ever before. To translate a sentence from one language to another, we can feed the LLM with information about:

- How other segments in the same document have been translated.

- How similar segments were translated in the past (re-using fuzzy match technology).

- Which terms have been identified in the source text and how they should be translated.

- The required style of translation (formal, informal, friendly, professional, etc.).

- Other settings like maximum length or gender-neutral language.
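As a rough illustration of the list above, here is a minimal Python sketch of how that information might be gathered into a single prompt. Everything in it is an assumption made for the example: the `TranslationContext` class, its field names, the `build_prompt` function, and the prompt wording are hypothetical and do not describe the real Translation Engine. Sending the prompt to an LLM is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TranslationContext:
    """Hypothetical bundle of linguistic assets and settings for one project."""
    source_lang: str
    target_lang: str
    document_examples: list = field(default_factory=list)  # (source, target) pairs from this document
    fuzzy_matches: list = field(default_factory=list)      # (score, source, target) from the Translation Memory
    terms: dict = field(default_factory=dict)              # source term -> required target term
    style: str = "professional"
    max_length: Optional[int] = None
    gender_neutral: bool = False


def build_prompt(segment: str, ctx: TranslationContext) -> str:
    """Assemble a plain-text prompt; calling an LLM with it is out of scope here."""
    lines = [
        f"Translate from {ctx.source_lang} to {ctx.target_lang}.",
        f"Style: {ctx.style}.",
    ]
    if ctx.max_length is not None:
        lines.append(f"Maximum length: {ctx.max_length} characters.")
    if ctx.gender_neutral:
        lines.append("Use gender-neutral language.")

    # Only mention terms that actually occur in this segment.
    hits = {s: t for s, t in ctx.terms.items() if s.lower() in segment.lower()}
    if hits:
        lines.append("Use this terminology:")
        lines += [f"- {s} => {t}" for s, t in hits.items()]

    if ctx.document_examples:
        lines.append("Earlier translations from this document:")
        lines += [f"- {s} => {t}" for s, t in ctx.document_examples]

    if ctx.fuzzy_matches:
        lines.append("Similar past translations (best match first):")
        for score, s, t in sorted(ctx.fuzzy_matches, reverse=True):
            lines.append(f"- [{score:.0%}] {s} => {t}")

    lines.append(f"Segment: {segment}")
    return "\n".join(lines)


ctx = TranslationContext(
    source_lang="English",
    target_lang="French",
    fuzzy_matches=[(0.83, "Press the red button to start.",
                    "Appuyez sur le bouton rouge pour démarrer.")],
    terms={"button": "bouton", "cover": "couvercle"},
    style="formal",
    max_length=60,
)
print(build_prompt("Press the green button to start.", ctx))
```

Keeping the assets in one structure and rendering the prompt in a single place makes it easy to see, and to adjust, exactly what the model is told for each segment.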

With all this information to hand, the LLM can give us exactly what we need. Moreover, given the typical workflow of a translation project, we can “teach” the LLM and make it better. By feeding back the final, verified translations, we help the model learn and make better and better suggestions over time. Super. We’re passing your content to a publicly available LLM and getting back high-quality translations. Happy? No? The “publicly available” thing is making you nervous? Fear not: I’m sure those nice people at any one of those companies I previously mentioned won’t be leaking all your Intellectual Property all over the web. Still not convinced? OK then; how about we do all this in a secure and privately hosted LLM? That way you can be confident that your data remains yours alone. The accompanying diagram shows our new Translation Engine. No chickens will be hurt.

Rolling this out

Our initial implementation takes the form of a new task that can be included in a custom workflow for Trados customers who have access to our powerful workflow editor. This will give users an early chance to experience the possibilities that our new approach brings. As we move forward, we’ll include it in the core functionality to make it accessible for everyone. Keep your eyes and ears open for more news through our community and our social media channels.